Apple unveils a prototype AI tool that animates images based on text descriptions

Apple’s in-house researchers have presented Keyframer, a prototype generative AI animation tool that lets users add motion to 2D images by describing how they should be animated.

In a research paper, Apple’s researchers wrote that the use of large language models (LLMs) in animation is “under-researched,” despite the potential these models have shown in other creative media such as writing and image generation. The paper presents the LLM-based Keyframer as one example of how the technology could be applied to animation, The Verge reports.

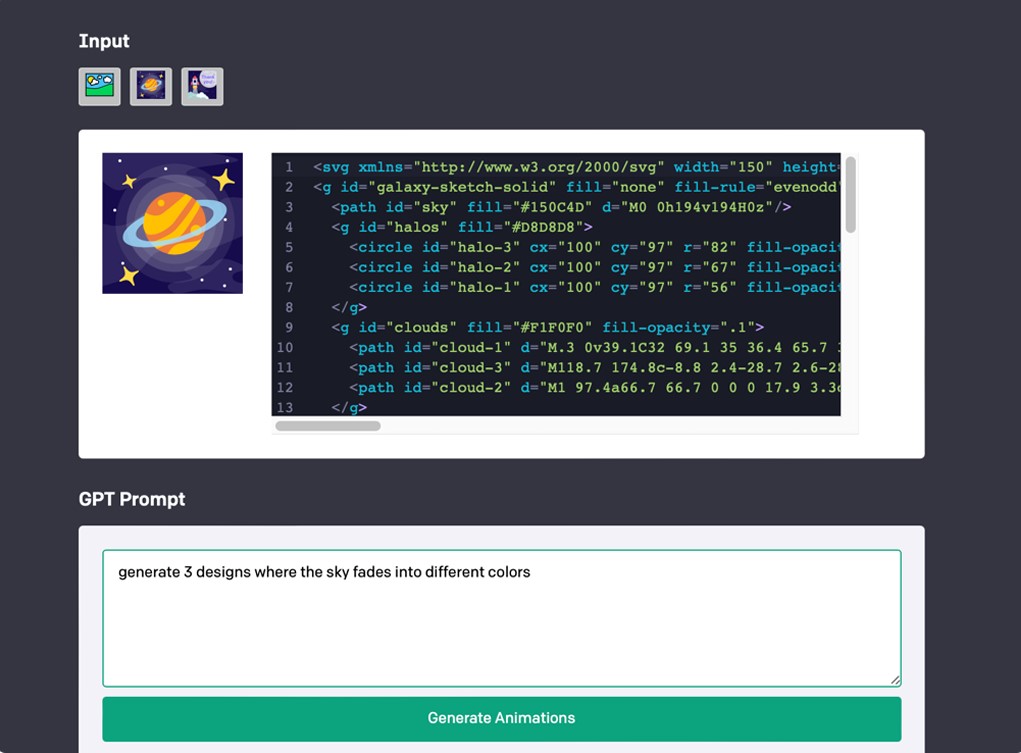

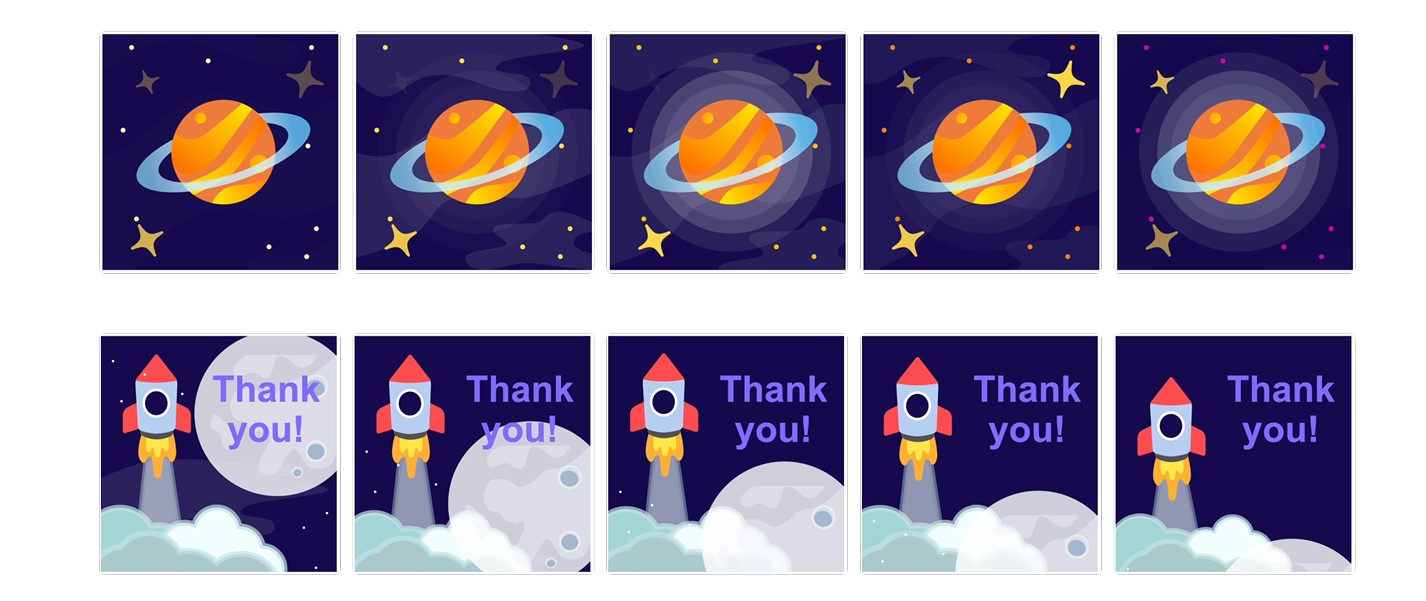

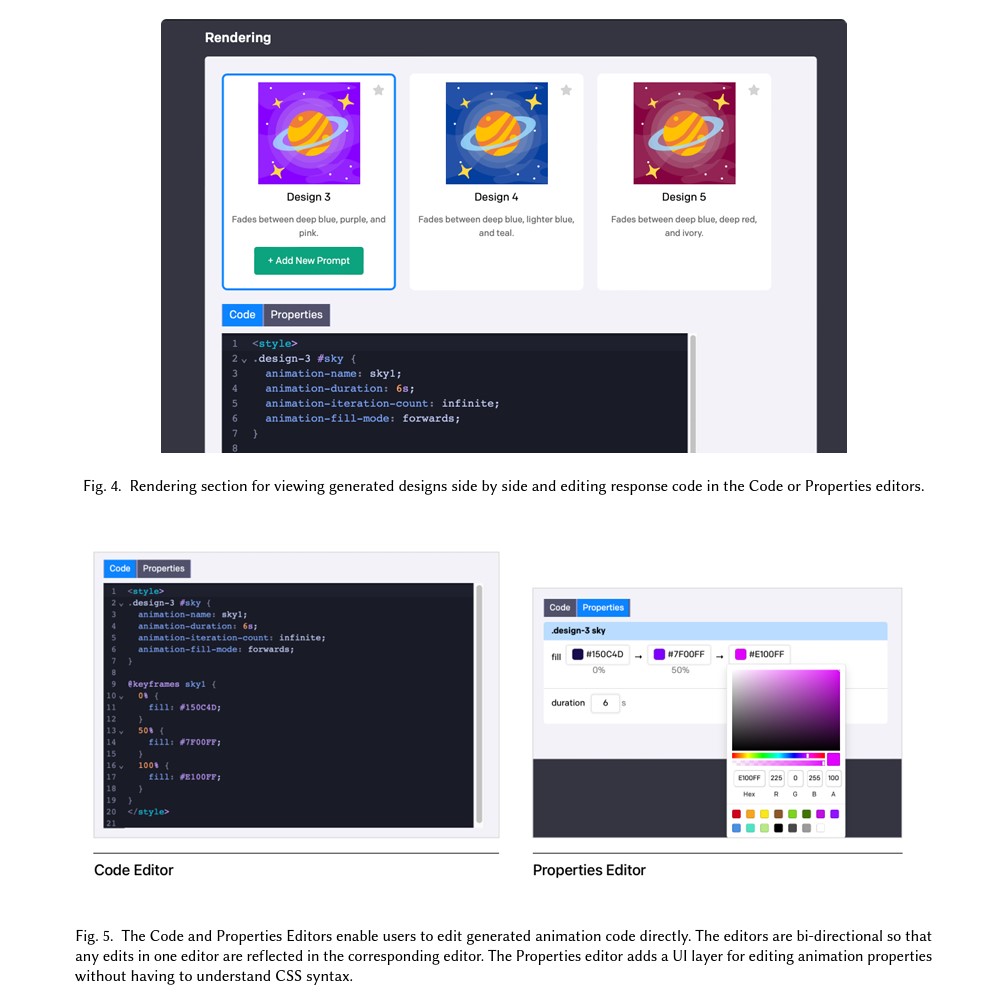

Using OpenAI’s GPT-4 as its base model, Keyframer accepts Scalable Vector Graphics (SVG) files, an illustration format that can be resized without losing quality, and generates CSS code that animates the image according to a text prompt. The user uploads an image, enters a prompt such as “let the stars twinkle,” and clicks the Generate button. Examples in the study show an illustration of Saturn switching between different backgrounds, and stars fading in and out in the foreground.

Users can generate several animation designs at once and adjust properties such as color codes and animation duration. No coding experience is required, since Keyframer automatically translates these changes into CSS, though users can also edit the generated code directly.
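The article does not show the CSS Keyframer actually produces. As a rough illustration only, here is a minimal Python sketch of how editable properties like a color code and a duration could be rendered into a CSS `@keyframes` rule for a “twinkling stars” effect. The `Animation` record, the `to_css` function, the `.star` selector and the opacity steps are all hypothetical and are not taken from Apple’s paper.

```python
from dataclasses import dataclass


@dataclass
class Animation:
    """Hypothetical editable properties for one animated SVG element."""
    selector: str      # CSS selector for the SVG element, e.g. ".star" (assumed)
    name: str          # name of the generated @keyframes rule
    duration_s: float  # animation duration in seconds
    fill: str          # color code applied to the element
    opacity_steps: tuple[float, ...] = (1.0, 0.2, 1.0)  # fade out, then back in


def to_css(anim: Animation) -> str:
    """Render the properties as a CSS @keyframes rule plus the rule that applies it."""
    n = len(anim.opacity_steps)
    if n < 2:
        raise ValueError("need at least two opacity steps")
    # Spread the steps evenly from 0% to 100% of the animation timeline.
    frames = [
        f"  {round(100 * i / (n - 1))}% {{ opacity: {op}; }}"
        for i, op in enumerate(anim.opacity_steps)
    ]
    keyframes = f"@keyframes {anim.name} {{\n" + "\n".join(frames) + "\n}"
    rule = (
        f"{anim.selector} {{\n"
        f"  fill: {anim.fill};\n"
        f"  animation: {anim.name} {anim.duration_s}s ease-in-out infinite;\n"
        "}"
    )
    return keyframes + "\n\n" + rule


# Changing the duration or color here changes only the emitted CSS values.
twinkle = Animation(selector=".star", name="twinkle", duration_s=1.5, fill="#FFD700")
print(to_css(twinkle))
```

Keeping the properties in a separate record mirrors the idea described above: a user can tweak a value such as the duration without touching the CSS, while the generated code stays plain text that can still be edited by hand.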

Still, the tool has a long way to go. Keyframer is not yet publicly available, and the user study in Apple’s paper involved only 13 participants, who were limited to two simple, pre-selected SVG images while experimenting with the tool.

Apple is also careful to acknowledge the tool’s limitations, noting that Keyframer focuses on web animations such as loading sequences, data visualizations, and animated transitions. The kind of animation seen in movies and video games, by contrast, is too complex to produce from text descriptions alone, at least for now.