Faster, Cheaper, More Powerful: DeepSeek Presents New Version of Its Language Model

Chinese technology company DeepSeek has introduced DeepSeek-V3-0324, an improved version of its large language model, just three months after releasing the original V3 in December 2024.

The updated model features enhanced efficiency and expanded capabilities, including the ability to create visually appealing web pages and high-quality reports in Chinese.

DeepSeek-V3-0324 stands out for its lower training compute requirements, shorter training time, and more affordable API pricing, while remaining competitive with rivals such as OpenAI’s GPT models.

A key feature of the new model is that it has no “thinking” phase: it responds right away, without the delay on complex tasks that is typical of DeepSeek’s earlier reasoning model, R1.

The new version has 685 billion parameters, making it one of the largest publicly available language models to date.

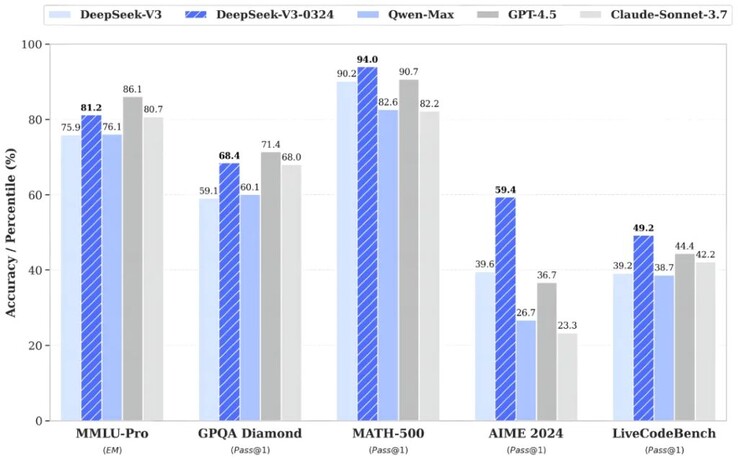

DeepSeek-V3-0324 improved its benchmark scores by 5.3 to 19.8 percentage points over the previous version, approaching the performance of leaders such as GPT-4.5 and Claude 3.7 Sonnet.

The updated version has also made significant progress in generating web pages, as well as in searching, writing, and translating Chinese-language texts.

To run the full DeepSeek-V3-0324 model, users will need at least 700 GB of free disk space and several Nvidia A100/H100 GPUs. However, there are also simplified versions of the model that can run on a single consumer GPU, such as the Nvidia RTX 3090.
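
The disk-space figure follows roughly from the parameter count. The short Python sketch below is a back-of-the-envelope estimate, not an official sizing guide: the bytes-per-parameter values (1 byte for FP8, 2 for BF16, 0.5 for a 4-bit quantization) and the 80 GB of memory per GPU are assumptions that the article does not state, and the estimate counts only the weights, ignoring activations and cache memory.

```python
import math

PARAMS = 685e9  # parameter count reported in the article

# Assumed storage width per parameter, in bytes (not stated in the article).
BYTES_PER_PARAM = {"fp8": 1, "bf16": 2, "int4": 0.5}

GPU_MEMORY_GB = 80  # assumption: 80 GB variant of the A100/H100


def weights_size_gb(params: float, bytes_per_param: float) -> float:
    """Size of the raw weights in decimal gigabytes."""
    return params * bytes_per_param / 1e9


def min_gpus(size_gb: float, gpu_memory_gb: float = GPU_MEMORY_GB) -> int:
    """Lower bound on the number of GPUs needed just to hold the weights."""
    return math.ceil(size_gb / gpu_memory_gb)


for name, width in BYTES_PER_PARAM.items():
    size = weights_size_gb(PARAMS, width)
    print(f"{name}: {size:.1f} GB of weights, at least {min_gpus(size)} GPUs")
```

Under these assumptions, one byte per parameter gives about 685 GB of weights, which is in line with the 700 GB requirement, and explains why several 80 GB GPUs are needed for the full model while a smaller or more aggressively compressed variant is the only realistic option for a single consumer card.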