Israel uses artificial intelligence to select targets in the Gaza Strip

The Israeli military used artificial intelligence to help select bombing targets in Gaza. This may have reduced the accuracy of the strikes and contributed to the deaths of many civilians, according to reports by +972 Magazine and Local Call.

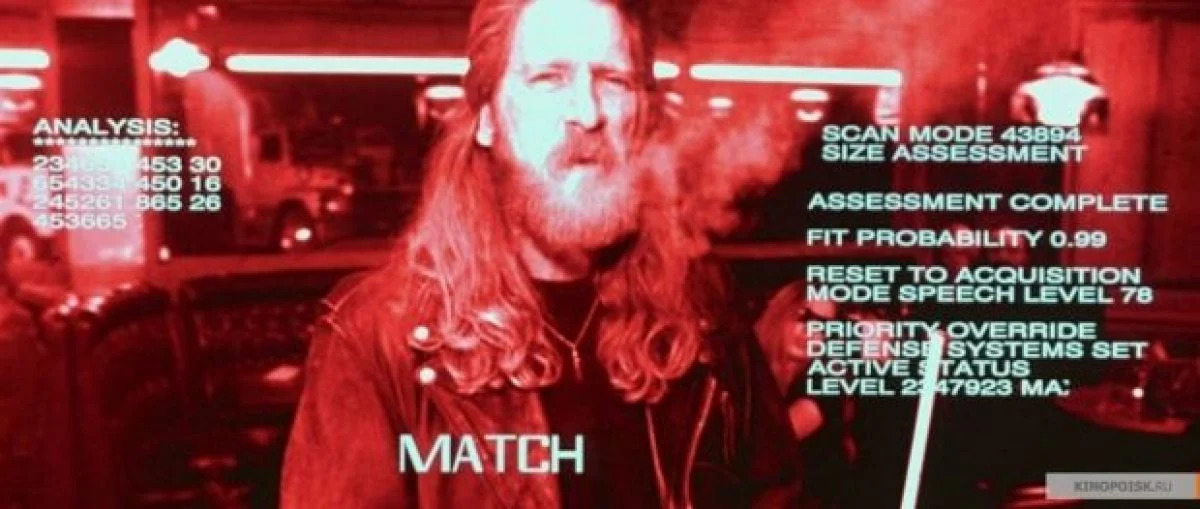

The system, called Lavender, was developed after the October 7 Hamas attacks. At its peak, Lavender marked 37,000 Palestinians in Gaza as suspected “Hamas militants” and “authorized” their assassination.

The Israel Defense Forces (IDF) denied the existence of such a kill list. A spokesperson said that artificial intelligence was not used to identify suspected terrorists but did not deny the existence of Lavender, which the spokesperson described as “simply tools for analysts in the process of identifying targets.” The analysts “should conduct independent reviews to verify that the identified targets meet the relevant definitions under international law and the additional restrictions provided for in the IDF guidelines,” the spokesperson said.

However, Israeli intelligence officials told +972 and Local Call that they were not required to conduct independent verification of Lavender targets before bombing them, and instead served as a “rubber stamp” for “machine decisions.” In some cases, the officers’ only role in the process was to determine whether the target was male.

To build Lavender, data on known operatives of Hamas and Palestinian Islamic Jihad was fed into a dataset. Lavender’s training also drew on data about people loosely affiliated with Hamas, such as employees of Gaza’s Internal Security Ministry.

Lavender was trained to identify “features” associated with Hamas operatives, such as being in a WhatsApp group with a known militant, changing cell phones every few months, or moving frequently. It then used these features to rate other Palestinians in Gaza on a scale of 1 to 100 according to how closely they resembled the known Hamas fighters in the original dataset. People whose score reached a certain threshold were marked as targets for attack. That threshold shifted constantly, “because it depends on where you set the bar for who is a Hamas operative,” one military source said.

According to the sources, the system had an accuracy rate of about 90%, implying that roughly one in ten of the people it flagged were misidentified. Some of those Lavender marked as targets merely had names or nicknames identical to those of known Hamas operatives. Others were relatives of Hamas operatives or people using phones that had once belonged to Hamas fighters.

According to the sources, intelligence officers were given wide latitude when it came to civilian casualties. The number of civilian deaths considered acceptable was set in advance: 15 to 20 people when targeting lower-level Hamas operatives and “hundreds” for senior Hamas officials.

Suspected Hamas militants were also targeted in their homes, which were located using a system called “Where’s Daddy?”. According to officers, this system placed targets identified by Lavender under constant surveillance. They were tracked until they reached their homes, at which point they were bombed, often together with their entire families. In some cases, officers bombed houses without verifying that the targets were inside, killing the civilians there.

The reports also describe Lavender as an extension of Israel’s broader use of surveillance technologies against Palestinians in both the Gaza Strip and the West Bank.