Human emotions, moods, and feelings are increasingly seen as a key parameter for improving how people interact with machines and systems. Until now, however, their complex and ambiguous nature has made emotional information difficult to convey and use accurately. A new study brings real-time recognition of human emotions much closer to practical use.

The technology, which recognizes human emotions in real time, was developed by Professor Jiyun Kim and his research team at the Department of Materials Science and Engineering at UNIST (Ulsan National Institute of Science and Technology), one of four public universities in South Korea dedicated to research in science and technology.

According to experts, the technology could transform a range of industries.

Previous attempts at emotion recognition

Researchers have long sought to understand and accurately extract emotional information, but even people cannot always recognize their own emotions or those of others. Psychologists and other scientists write extensively about emotional intelligence and how to develop it.

Several technological products for recognizing emotions already exist. For example, Boston-based Affectiva, one of the leading developers of emotion analysis systems and founded by former MIT researchers, has built one of the world's largest emotion databases, containing more than 10 million facial expressions from 87 countries. Using this data, the company has created applications that can, for example, detect distracted or risky drivers or gauge consumers' emotional reactions to advertising.

Amazon, Microsoft, and IBM have developed their own emotion recognition systems, and in 2016, Apple bought Emotient, a startup that develops software for recognizing emotions in photos.

In China, the company Taigusys sells an emotion recognition product that captures and identifies the facial expressions of company employees. Taigusys' clients include Huawei, China Mobile, China Unicom, and PetroChina.

Difficulties of the process

Decoding and encoding emotional information is a major challenge because emotions are abstract, complex, and highly personal.

According to Kate Crawford, a research professor at USC Annenberg and a senior researcher at Microsoft Research, researchers in the field of emotion have not reached a consensus on what an emotion is, how emotions are formed and expressed within us, what their physiological or neurobiological functions might be, and how they manifest under different stimuli. Even so, automated emotion-reading systems already exist, and Crawford questions their reliability and accuracy:

The ongoing debate among scientists confirms the main weakness of this technology: universal detection is the wrong approach. Emotions are complex; they evolve and change depending on our culture and history, all the different contexts that exist outside of artificial intelligence.

Kate Crawford

Breakthrough in emotion recognition

To address this problem, the UNIST team developed a multimodal human emotion recognition system that combines verbal and non-verbal data to make full use of the available emotional information.

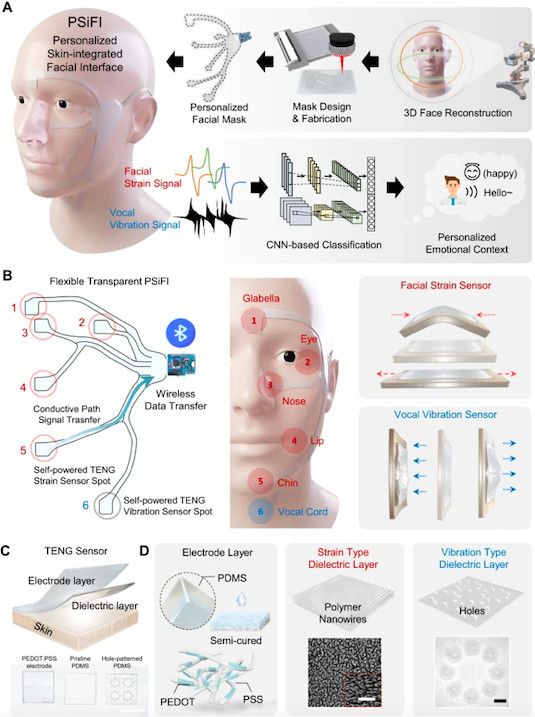

The core of this development is the personalized skin-integrated facial interface (PSiFI) system, which is self-powered, lightweight, and transparent. It incorporates the first bidirectional triboelectric strain and vibration sensor, which lets it capture and integrate verbal and non-verbal data simultaneously: strain sensing tracks facial deformation, while vibration sensing picks up vocal cord vibrations during speech. The system is fully integrated with data processing circuitry for wireless data transmission, enabling real-time emotion recognition.
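To illustrate what combining verbal and non-verbal data can mean in practice, here is a minimal Python sketch of early feature fusion. It assumes, hypothetically, that each sensor delivers a short window of samples, with several facial strain channels and one vibration channel. The feature choices (RMS amplitude, peak-to-peak range, zero-crossing rate) and the function names are illustrative only and are not taken from the PSiFI work.

```python
import math
from typing import List, Sequence


def rms(signal: Sequence[float]) -> float:
    """Root-mean-square amplitude of a signal window (needs at least one sample)."""
    if not signal:
        raise ValueError("signal window is empty")
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def peak_to_peak(signal: Sequence[float]) -> float:
    """Difference between the largest and smallest sample in the window."""
    return max(signal) - min(signal)


def zero_crossing_rate(signal: Sequence[float]) -> float:
    """Fraction of consecutive sample pairs whose sign changes (needs at least two samples)."""
    if len(signal) < 2:
        raise ValueError("need at least two samples")
    crossings = sum(1 for a, b in zip(signal, signal[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(signal) - 1)


def fuse_features(strain_channels: List[Sequence[float]],
                  vibration: Sequence[float]) -> List[float]:
    """Hypothetical early fusion: concatenate per-sensor features into one vector."""
    features: List[float] = []
    # Non-verbal part: amplitude features for each facial strain channel.
    for channel in strain_channels:
        features.append(rms(channel))
        features.append(peak_to_peak(channel))
    # Verbal part: loudness and a rough frequency proxy from the vibration signal.
    features.append(rms(vibration))
    features.append(zero_crossing_rate(vibration))
    return features


if __name__ == "__main__":
    strain = [[0.0, 0.5, -0.5, 0.0], [0.1, 0.1, 0.1, 0.1]]  # made-up samples
    voice = [1.0, -1.0, 1.0, -1.0]                           # made-up samples
    print(fuse_features(strain, voice))
```

The resulting single vector is what a downstream classifier would consume; the ordering (strain features first, then vibration features) is an arbitrary choice in this sketch.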

The triboelectric effect is the electrical charging of materials that come into contact and rub against each other. Charge is transferred as contact between the surfaces is made and broken. Where contact is established, at least in some areas of the surfaces, adhesion occurs: the surfaces of the bodies stick together.
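For a rough quantitative picture, a common idealized model of a triboelectric generator operating in contact-separation mode gives the open-circuit voltage as V_oc = σx/ε0, where σ is the triboelectric surface charge density, x is the gap between the surfaces, and ε0 is the vacuum permittivity. The article does not say which mode or materials PSiFI uses, so the sketch below only illustrates this textbook model; the charge density value is a hypothetical example.

```python
EPSILON_0 = 8.854e-12  # vacuum permittivity in F/m (rounded)


def open_circuit_voltage(sigma: float, gap: float) -> float:
    """Idealized contact-separation model: V_oc = sigma * x / epsilon_0.

    sigma: triboelectric surface charge density in C/m^2 (hypothetical value)
    gap:   separation x between the two surfaces in metres
    """
    if gap < 0:
        raise ValueError("gap must be non-negative")
    return sigma * gap / EPSILON_0


if __name__ == "__main__":
    sigma = 10e-6  # 10 microcoulombs per square metre, an illustrative value only
    for gap_mm in (0.0, 0.05, 0.1):
        volts = open_circuit_voltage(sigma, gap_mm / 1000)  # convert mm to m
        print(f"gap {gap_mm:.2f} mm -> V_oc = {volts:.1f} V")
```

In this idealized model the voltage is zero while the surfaces touch and grows linearly as they separate, which is why mechanical motion alone can produce a measurable signal without an external power source.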

Using machine learning algorithms, the system recognizes human emotions accurately in real time, even when people wear masks. It was successfully used in a digital concierge application in a virtual reality (VR) environment.
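The article does not name the machine learning model. As a deliberately simple stand-in, the sketch below uses a nearest-centroid classifier: it averages the labelled feature vectors for each emotion and assigns a new vector to the emotion whose average is closest in Euclidean distance. The emotion labels, feature vectors, and function names are hypothetical, and this is not the researchers' method.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def train_centroids(samples: List[Tuple[Vector, str]]) -> Dict[str, List[float]]:
    """Average the feature vectors of each label to get one centroid per emotion."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids: Dict[str, List[float]], features: Vector) -> str:
    """Return the label whose centroid is nearest (Euclidean distance) to features."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


if __name__ == "__main__":
    # Made-up two-dimensional fused feature vectors for two hypothetical emotions.
    training = [([0.9, 0.1], "happy"), ([0.8, 0.2], "happy"),
                ([0.1, 0.9], "sad"), ([0.2, 0.8], "sad")]
    model = train_centroids(training)
    print(predict(model, [0.7, 0.3]))
```

A nearest-centroid model can be recalibrated from just a few samples per person, which matters when emotional expression varies between individuals; a production system would likely use a more expressive model.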

With this system, emotions can be recognized in real time without complex measurement equipment, which opens the door to a wide range of future emotion recognition devices.