Apple has created a neural network that can “photoshop” images based on text descriptions

Apple researchers have developed a new artificial intelligence model that lets users describe in plain language what they want to change in a photo. Images can be edited this way without ever opening photo editing software.

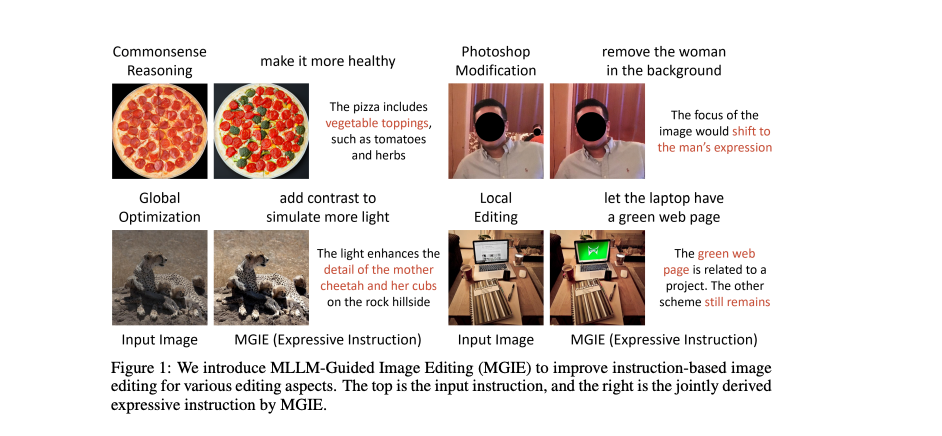

The MGIE (MLLM-Guided Image Editing) model, which Apple developed in collaboration with the University of California, Santa Barbara, can crop, resize, flip, and add filters to images based on text prompts. It can also handle more complex edits, such as changing the shape of specific objects in a photo or making them brighter.

MGIE uses a multimodal language model in two ways. First, it learns to interpret user prompts. Then it “imagines” what the edit will look like (for example, a request for a bluer sky becomes an increase in the brightness of the sky region of the image).
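The two steps described above can be illustrated with a toy sketch. This is not MGIE's code: the lookup table stands in for the language model, the sky mask is supplied by hand, and every name here (`EXPLICIT_EDITS`, `interpret`, `apply_edit`) is hypothetical. It only shows the idea of turning a terse prompt into an explicit edit and then applying that edit to one region of the image.

```python
# Toy illustration only; MGIE itself uses a multimodal language model
# and a learned image editor. All names below are hypothetical.

# Step 1 stand-in: map a terse prompt to an explicit edit description.
EXPLICIT_EDITS = {
    "make the sky bluer": {"region": "sky", "brightness_gain": 1.5},
}


def interpret(prompt):
    """Return the explicit edit for a prompt (simple lookup, no model)."""
    return EXPLICIT_EDITS[prompt.lower().strip()]


def apply_edit(image, mask, edit):
    """Step 2 stand-in: brighten pixels where the mask is True.

    image is a list of rows of (r, g, b) tuples; mask has the same
    shape and marks the region named in the edit. Values clamp at 255.
    """
    gain = edit["brightness_gain"]
    result = []
    for row, mask_row in zip(image, mask):
        new_row = []
        for pixel, inside in zip(row, mask_row):
            if inside:
                pixel = tuple(min(255, round(c * gain)) for c in pixel)
            new_row.append(pixel)
        result.append(new_row)
    return result


# A 2x2 image: top row is sky, bottom row is ground.
image = [
    [(100, 150, 200), (100, 150, 200)],
    [(80, 60, 40), (80, 60, 40)],
]
sky_mask = [
    [True, True],
    [False, False],
]

edited = apply_edit(image, sky_mask, interpret("Make the sky bluer"))
```

In this sketch only the top row changes; the ground pixels pass through untouched because the mask excludes them.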

When editing a photo with MGIE, users simply type what they want to change in the image. For example, when editing an image of a pepperoni pizza, you can enter the prompt “make it healthier” and the model will add vegetable toppings. A photo of tigers in the Sahara looks dark, but after the model was told to “add more contrast to simulate more light,” the image became brighter.

“Instead of short but ambiguous instructions, MGIE detects explicit visual intent and leads to intelligent image editing,” the researchers say in their paper.

Apple has made MGIE available for download on GitHub and has also released a web demo on Hugging Face Spaces. The company has not said what its future plans for the model are.