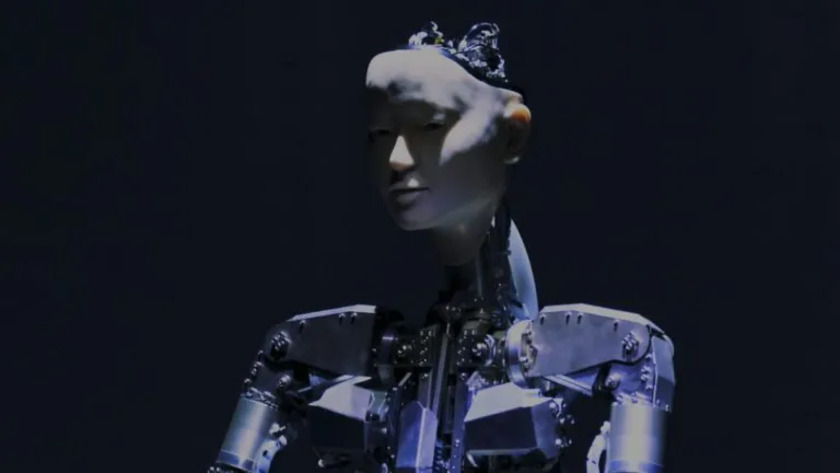

Japan creates a humanoid robot based on GPT-4: the result is impressive (video)

A team from the University of Tokyo has presented Alter3, a humanoid robot that can perform movements using the GPT-4 large language model (LLM).

Alter3 uses OpenAI's GPT-4 to dynamically assume various poses, from taking a selfie to imitating a ghost, all without the need for pre-programmed database entries.

“Alter3’s response to spoken content through facial expressions and gestures is a significant advancement in humanoid robotics that can be easily adapted to other androids with minimal modifications,” the researchers say.

Work on integrating LLMs with robots has so far focused on improving basic communication and modeling realistic reactions. Researchers are also exploring how LLMs can let robots understand and execute complex instructions, thereby increasing their functionality.

Traditionally, low-level robot control is tied to hardware and falls outside the scope of the text corpora that LLMs are trained on. This makes direct LLM-based robot control difficult. To solve this problem, the Japanese team developed a method of converting descriptions of human movement into code the android can execute. This means that the robot can independently generate sequences of actions over time without developers having to program each part of the body individually.

During the interaction, a person can give Alter3 commands like “Take a selfie with your iPhone.” The robot then sends a series of queries to GPT-4 to get instructions on the necessary steps. GPT-4 translates these steps into Python code, which allows the robot to “understand” and perform the necessary movements. Only Alter3's upper body moves; its lower body remains stationary, attached to the stand.
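The flow described above (a command, then a step-by-step description, then Python code for the actuators) can be illustrated with a minimal sketch. It is not the team's implementation: `stub_llm` is a stand-in that returns canned text instead of calling GPT-4, and the `set_axis` command name, the axis numbers, and the 0 to 255 value range are all assumptions made for illustration. Only the count of 43 actuators comes from the article.

```python
from typing import Callable, List, Tuple

NUM_AXES = 43  # Alter3 has 43 actuators (from the article)
MAX_VALUE = 255  # assumed actuator value range, for illustration only


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a GPT-4 call; returns canned text so the sketch runs offline."""
    if prompt.startswith("DESCRIBE:"):
        return "1. Raise the right arm.\n2. Tilt the head.\n3. Smile."
    if prompt.startswith("CODE:"):
        # Axis ids and values below are invented, not Alter3's real mapping.
        return "set_axis(17, 200)\nset_axis(3, 140)\nset_axis(1, 255)"
    return ""


def parse_commands(code: str) -> List[Tuple[int, int]]:
    """Parse lines shaped like set_axis(<id>, <value>) instead of exec-ing generated code."""
    commands = []
    for line in code.splitlines():
        line = line.strip()
        if not (line.startswith("set_axis(") and line.endswith(")")):
            continue  # ignore anything that is not a recognized command
        axis_str, value_str = line[len("set_axis("):-1].split(",")
        axis, value = int(axis_str), int(value_str)
        # Drop commands that fall outside the assumed hardware limits.
        if 1 <= axis <= NUM_AXES and 0 <= value <= MAX_VALUE:
            commands.append((axis, value))
    return commands


def instruction_to_commands(
    instruction: str, llm: Callable[[str], str] = stub_llm
) -> List[Tuple[int, int]]:
    """Two queries: first describe the motion in steps, then turn the steps into code."""
    steps = llm("DESCRIBE: " + instruction)
    code = llm("CODE: " + steps)
    return parse_commands(code)


if __name__ == "__main__":
    print(instruction_to_commands("Take a selfie with your iPhone"))
```

Parsing the generated text into validated `(axis, value)` pairs, rather than executing it directly, is a design choice of this sketch; it keeps out-of-range values from reaching the hardware.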

Alter3 is the third iteration in the Alter series of humanoid robots, which dates back to 2016. It has 43 actuators responsible for facial expressions and limb movements, all powered by compressed air. This configuration provides a wide range of expressive gestures. The robot cannot walk, but it can imitate typical walking and running movements.

Alter3 also demonstrated the ability to copy human poses using a camera and the OpenPose framework. The robot adapts its joints to the observed postures and saves successful imitations for future use. Interacting with a human led to a variety of postures, which supports the idea that different movements come from imitating humans, just as newborns learn through imitation.
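The idea of saving successful imitations can be sketched as a small pose memory. This is an assumption-laden illustration, not the researchers' code: it does not call OpenPose, it treats a pose as a list of 2D keypoints already extracted by some pose estimator, and the names `pose_distance` and `PoseMemory`, the mean-distance metric, and the threshold are all hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Keypoints = Sequence[Tuple[float, float]]  # 2D keypoints, e.g. from a pose estimator


def pose_distance(a: Keypoints, b: Keypoints) -> float:
    """Mean Euclidean distance between matching keypoints (an assumed metric)."""
    if len(a) != len(b) or not a:
        raise ValueError("poses must be non-empty and have the same number of keypoints")
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


class PoseMemory:
    """Stores actuator settings for imitations that matched the human pose closely."""

    def __init__(self, threshold: float = 0.1) -> None:
        self.threshold = threshold  # hypothetical cutoff for a "successful" imitation
        self.entries: List[Tuple[List[Tuple[float, float]], Dict[int, int]]] = []

    def maybe_store(
        self, human: Keypoints, robot: Keypoints, axis_values: Dict[int, int]
    ) -> bool:
        """Save the actuator settings only if the robot's pose is close enough."""
        if pose_distance(human, robot) <= self.threshold:
            self.entries.append((list(human), dict(axis_values)))
            return True
        return False

    def recall(self, human: Keypoints) -> Optional[Dict[int, int]]:
        """Return the stored settings whose human pose is nearest to the observed one."""
        if not self.entries:
            return None
        return min(self.entries, key=lambda e: pose_distance(human, e[0]))[1]
```

In use, `maybe_store` would be called after each imitation attempt, and `recall` would let the robot reuse settings when it sees a similar posture again.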

Before the advent of LLMs, researchers had to carefully control all 43 actuators to reproduce a person’s pose or imitate a behavior, such as serving tea or playing chess. This required extensive manual tuning, but AI has freed the team from this routine.

“We expect Alter3 to effectively engage in dialog by demonstrating contextually relevant facial expressions and gestures. It has demonstrated the ability to mirror emotions, such as showing sadness or happiness in response, thereby sharing emotions with us,” the researchers say.