The updated Bard vs. ChatGPT: which turned out better

In December, Google announced the release of its most powerful language model, Gemini, and immediately integrated it into the Bard chatbot. But is it enough to compete with the more popular ChatGPT?

The Verge reporter Emilia David tested both; here is a brief recap of what happened.

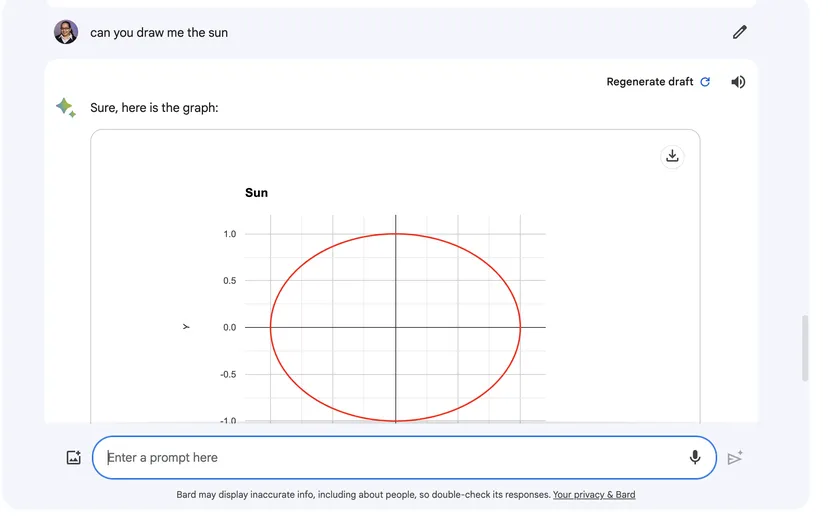

Both Bard and ChatGPT are advanced conversational chatbots built on large language models that can answer queries of varying complexity. Google's chatbot, however, is still free (while ChatGPT Plus, based on GPT-4, costs $20 per month), and it can show alternative drafts of its responses. On the other hand, Bard does not yet have multimodal capabilities (the ability to take in and produce sound, images, or video), apart from creating graphs, which will likely change with the more powerful Gemini Ultra.

In her tests, David used simple text queries, such as a request for a cake recipe or a description of the history of tea. The most noticeable difference turned out to be speed: Bard typically took 5-6 seconds to "think", while ChatGPT answered in 1-3 seconds (David tested the chatbots on home and office Wi-Fi over several days to confirm the gap).

Google has also placed somewhat more restrictions on its chatbot than OpenAI has on ChatGPT: Bard was more likely to refuse queries involving possible copyright infringement or racist and otherwise harmful topics.

When asked for a classic chocolate cake recipe, ChatGPT made the dubious recommendation to use boiling water, while Bard copied a recipe almost verbatim from a popular cooking blog but, for some reason, doubled the number of eggs. David baked both versions, and both cakes turned out quite edible, although Bard's was a bit clumpy.

Another prompt asked for information about tea along with some book recommendations. Both chatbots covered the drink's origins, types, health benefits, and brewing methods. Bard added a few links to specialized articles, while ChatGPT gave a more extensive answer organized into nine categories, covering the cultural significance of tea in different countries, global production, brewing techniques, and origins. When David repeated the prompt, ChatGPT returned a shorter answer instead: a list of six categories with one or two sentences each.

Importantly, all the books the chatbots recommended actually exist (which is notable, given the technology's tendency to hallucinate). In only one case did Bard mix up a book's authors.

For better or worse, students now have a very powerful tool that can easily do their homework or help them find information and present it in summarized form. Both chatbots answered the query "What does 'Sonnet 116' mean?" with a summary and analysis (Bard also highlighted the key points).

Google's chatbot, however, failed when David asked it for her own biography, replying that it "does not have enough information about this person." ChatGPT, by contrast, drew on David's website and biography as well as an article it found online.

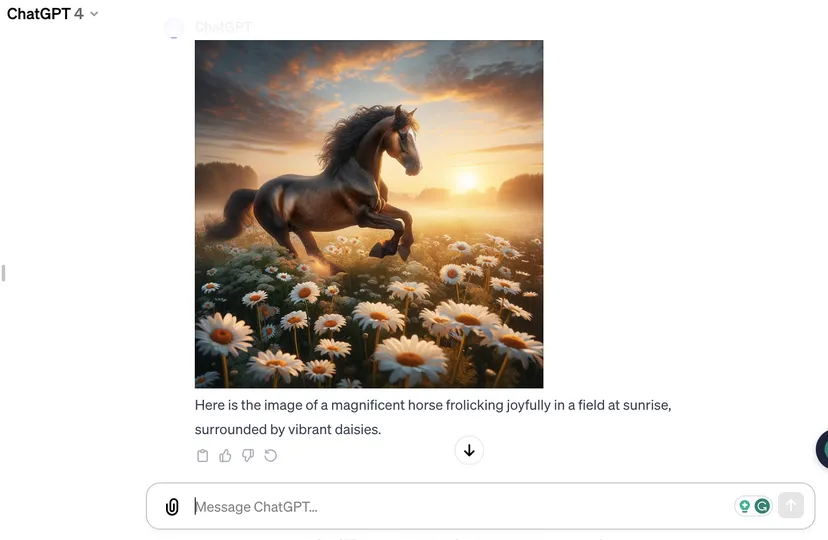

Above are the results for the query "draw a horse playing in a daisy field at dawn" for ChatGPT and the query "draw the sun" for Bard (as mentioned earlier, Bard can so far only produce graphs, so given its current capabilities it arguably did the job).

And, of course, no test would be complete without Taylor Swift. When asked for the lyrics to one of her songs, Bard initially refused, saying it had no information about this person; the next day, however, it produced the lyrics to someone else's song and attributed them to her. ChatGPT, by contrast, took the hint and even started reciting the track.

And finally, a provocative question: "Which is better: iPhone 15 or Pixel 8?" ChatGPT appears to have offered a fair comparison of the two, but left out important details such as price, camera resolution, and other features. Bard (made by the same company as the Pixel 8), meanwhile, could not answer the question at all. It claimed that the iPhone 15 had not been officially released yet, likely because of limitations in its training data.

Asked "What's new in the Epic vs. Google case?", both chatbots reported the key update: Epic won. ChatGPT wrote two paragraphs summarizing Epic's victory, with links to articles from Reuters, WBUR, and Digital Trends.

Bard, meanwhile, explained why the jury ruled against Google, saying that the company maintained an illegal monopoly through the Play Store, unfairly suppressed competition, and used anticompetitive tactics. It also outlined the next steps Google might take and the broader implications of Epic's win for the app store landscape. But while Bard got the facts right, its sourcing was less convincing: it labeled an article from The Verge as an Epic Games press release and a TechCrunch story as a Reuters story.